AI has become the fastest-moving force in revenue operations, and every leadership team feels the pressure to capture its upside. But the moment AI touches real workflows, it shines a bright spotlight on every weak link inside the GTM engine.

Processes that felt “good enough” suddenly show their age. Data shortcuts that never caused trouble begin to block meaningful output, and even small misalignments across teams start compounding at scale.

This exposure is powerful because AI unapologetically demands operational clarity. The more an organization pushes toward AI-driven outcomes, the more it must strengthen its routing logic, data lineage, enrichment standards, and decision frameworks.

Unsurprisingly, the companies that take this seriously unlock momentum quickly.

Their AI models become more accurate, their automation becomes more reliable, and their teams experience smoother handoffs with fewer points of friction.

And this is where the real story begins. Once AI exposes everything that slows a revenue engine down, the next question becomes far more interesting: how do the most effective teams turn that exposure into their biggest operational advantage?

The ROI mirage: Why AI looks better on paper than in operations

AI projections often assume ideal conditions like clean data, unified systems, and disciplined workflows. Real revenue engines rarely operate that neatly.

The moment AI enters production, these assumptions collide with fragmented processes and inconsistent data standards.

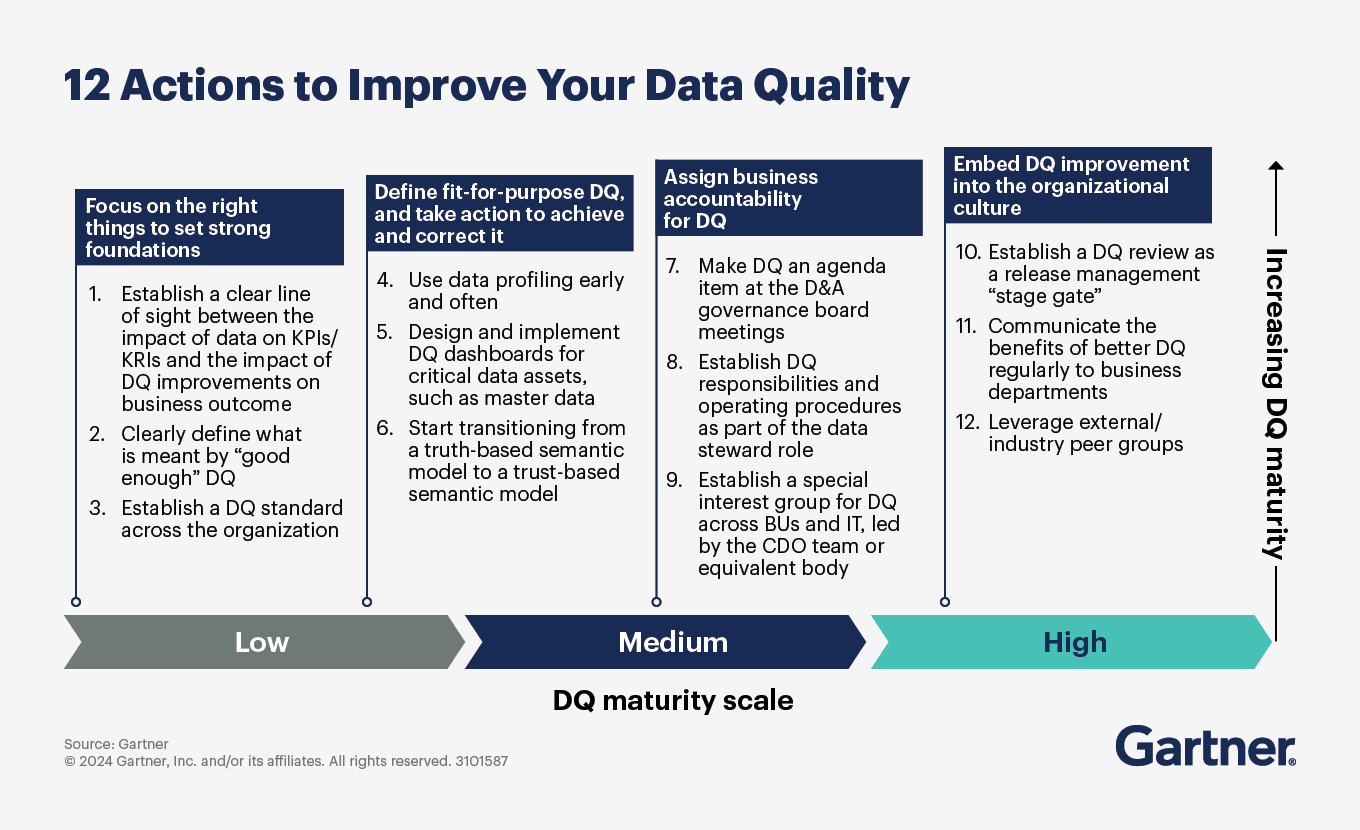

Data quality plays a major role. Even small inconsistencies affect scoring accuracy, routing, and next-best-action models. Gartner estimates that poor data quality costs organizations an average of $12.9M per year, and when AI runs on top of that environment, the impact compounds.

Workflow fragmentation adds another layer. Marketing, sales, and success often operate with separate rules and definitions.

AI attempts to unify these motions, yet differing processes slow adoption and dilute performance.

💡Discover how HubSpot-Salesforce integration fuels sales-marketing alignment

Teams that calibrate expectations to their actual operational maturity see more predictable outcomes. ROI grows steadily when the foundations become stable enough for AI to make reliable decisions.

Source: Gartner

Key takeaway: AI ROI strengthens when expectations align with operational reality and when teams invest in the data and workflow discipline AI depends on.

The talent and operating model gap no one budgets for

AI adoption slows when organizations underestimate the operating shift required to absorb AI into everyday decision-making.

Models generate insights at speed, yet teams lack clear ownership, confidence, and decision authority to act on them consistently. Insight velocity increases, while execution velocity stays flat.

This gap stems from how roles and decision flows are designed. Most revenue teams still operate with responsibilities built for manual analysis and linear approvals.

AI introduces probabilistic signals, confidence scores, and dynamic recommendations, but ownership over these signals remains unclear. As a result, decisions stall, managers intervene, and teams revert to familiar patterns.

This challenge is widely documented. According to a World Economic Forum report, 44% of workers' core skills are expected to change within five years, largely driven by AI and automation.

Inside GTM teams, this gap in skills and structure creates predictable friction:

- AI surfaces insights without clear accountability for action

- Reps receive recommendations without guidance on confidence thresholds

- Managers preserve legacy approval paths, slowing execution

- AI remains advisory instead of becoming embedded in workflows

The priority, then, is to redesign how decisions travel through the organization: define who acts on AI signals, how human judgment strengthens outcomes, and how feedback improves future recommendations.

AI becomes part of the operating model rather than an overlay on existing roles.

Key takeaway: AI adoption accelerates when operating models and skills evolve with the technology.

Why AI pilots work but real rollouts struggle

AI pilots often show strong early results because they run in controlled, tidy environments. Teams pick clean datasets, restrict workflows, and confine use to a small group of users. This artificially reduces complexity, so pilot results don't reflect real-world GTM operations.

Once AI expands into full business use, variability explodes. Processes differ by segment, role, region, or product. Exceptions accumulate, definitions drift, and even small inconsistencies disrupt how AI interprets signals, leading to unpredictable outputs.

Several recurring patterns cause problems:

- Pilot teams follow streamlined processes, but the broader org doesn’t.

- Isolated workflows break when exposed to multiple systems and data sources.

- AI becomes brittle when human behavior varies across teams.

- Insights that worked in one motion fail to generalize across the entire funnel.

The data underscores this reality. According to a 2025 study by Fivetran, 42% of enterprises report that more than half of their AI projects have been delayed, underperformed, or failed due to data readiness issues.

This shows how often pilot success becomes a mirage once variability and data complexity enter the equation, and outcomes diverge sharply from expectations.

Process fragmentation adds further drag. GTM functions often operate with definitions and rules created years apart, leading to:

- Conflicting metrics across teams

- Handoffs that depend on tribal knowledge

- Exceptions that break automation logic

System-level inconsistency compounds everything. CRMs, MAPs, support tools, and enrichment providers each hold partial truths. AI struggles to perform when the underlying architecture is misaligned, leaving teams to fix outputs manually.

Organizations that treat pilots as signals and use them to map out full-system readiness build a foundation for reliable, scalable AI adoption.

Key takeaways: Pilot results highlight possibilities; stable operations unlock real, sustainable AI value. Scaling AI requires infrastructure, governance, and readiness.

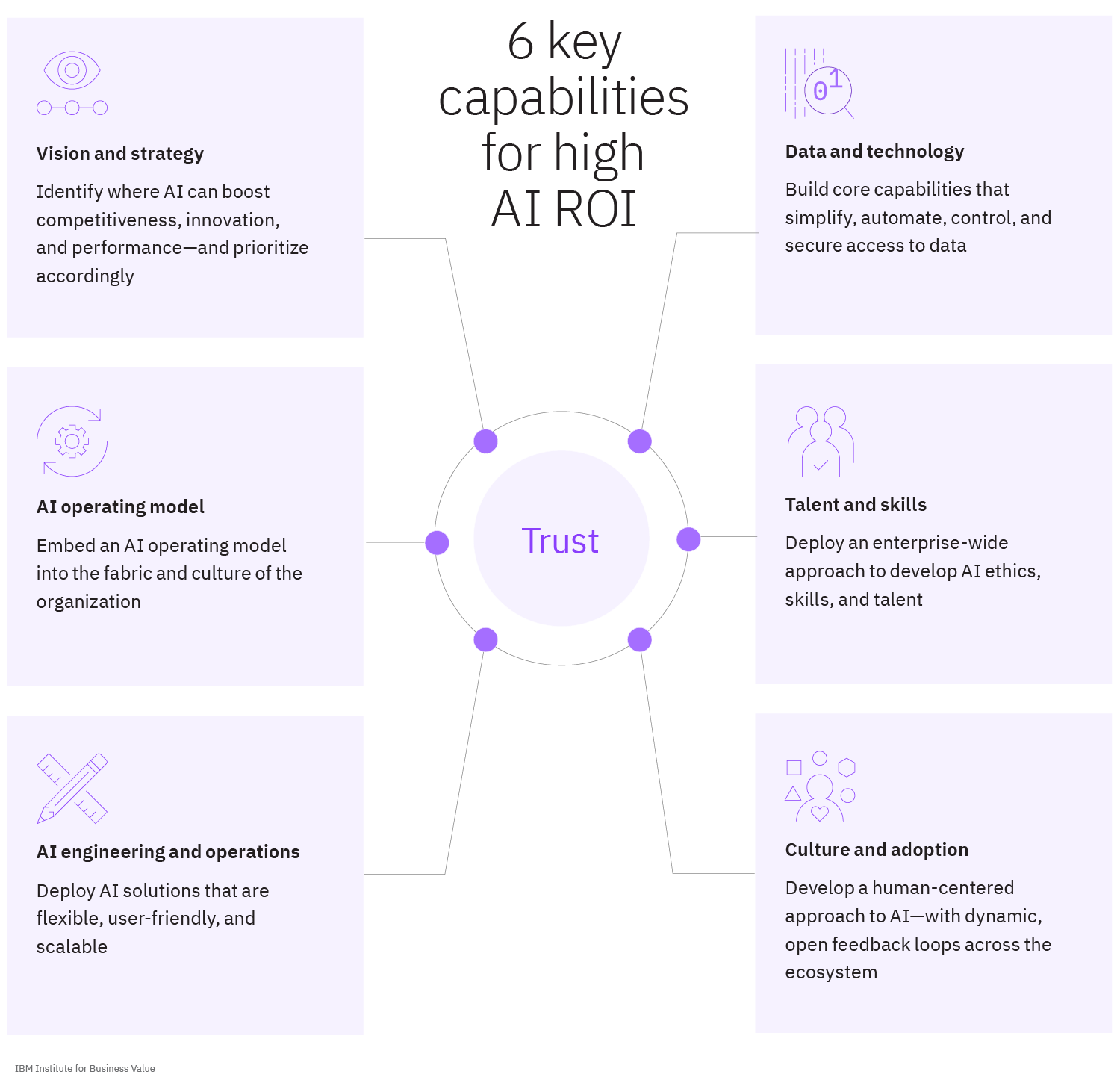

Building the AI capability engine that produces real ROI

AI delivers durable ROI when it operates inside a system designed to learn, adapt, and improve over time.

Tools can accelerate execution, but only a capability engine can sustain performance as complexity grows. This is where AI adoption separates short-term wins from long-term advantage.

To leverage AI’s potential to the fullest, you must treat it as an operating layer woven into how decisions are made, reviewed, and refined. You need to focus less on isolated use cases and more on strengthening the system that surrounds the model.

As that system matures, every AI-driven outcome improves the next one.

A strong AI capability engine is built on five reinforcing elements:

- Data consistency: shared definitions, validated inputs, and dependable enrichment across the GTM engine

- Decision architecture: clearly defined logic for how signals translate into actions and thresholds

- Governance loops: regular calibration of prompts, models, and rules based on real outcomes

- Execution discipline: workflows stable enough for automation to perform reliably at scale

- Human-AI collaboration: teams equipped to interpret outputs, apply judgment, and strengthen future recommendations
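To make "decision architecture" more concrete, here is a minimal sketch of how signals might translate into actions through confidence thresholds. Everything in it is a hypothetical illustration, not a specific vendor's logic: the `Signal` type, the `route_signal` function, the action names, and the threshold values (0.85 and 0.60) are all assumptions. In practice, a governance loop would recalibrate those thresholds against real outcomes.

```python
from dataclasses import dataclass

# Hypothetical thresholds: real values would be calibrated
# against observed outcomes during governance reviews.
AUTO_ACT_THRESHOLD = 0.85
REVIEW_THRESHOLD = 0.60


@dataclass
class Signal:
    account: str
    recommendation: str
    confidence: float  # model confidence score in [0, 1]


def route_signal(signal: Signal) -> str:
    """Map an AI signal to a named action based on its confidence score."""
    if not 0.0 <= signal.confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if signal.confidence >= AUTO_ACT_THRESHOLD:
        return "auto_execute"  # workflow acts without manual approval
    if signal.confidence >= REVIEW_THRESHOLD:
        return "rep_review"  # a rep applies judgment before acting
    return "log_only"  # recorded for calibration; no action taken


signals = [
    Signal("Acme", "schedule renewal call", 0.91),
    Signal("Globex", "offer upsell bundle", 0.72),
    Signal("Initech", "flag churn risk", 0.40),
]
for s in signals:
    print(s.account, route_signal(s))
```

The point of writing the thresholds down explicitly is that ownership becomes unambiguous: every signal lands in exactly one bucket, and reps know which recommendations they are expected to review.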

As these elements connect, AI begins to compound: forecasts sharpen because inputs stabilize, handoffs improve because logic aligns, and experimentation accelerates because feedback loops tighten.

Finally, the system starts producing momentum rather than requiring constant intervention. This is where AI stops being managed and starts shaping how the organization operates.

Source: IBM

Key takeaway: AI generates outsized ROI when it becomes a compounding capability embedded in the operating system.

Teams building this engine move beyond adoption and enter a phase where learning, execution, and advantage reinforce one another faster than manual optimization ever could.

So before anything else, ask yourself: on a scale of 1 to 10, how prepared is your team for AI?

Dashboards and analytics